A great way to run a real-world disk test on your Linux system is with a program called dd.

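The name dd is usually traced to the Data Definition (DD) statement in IBM's JCL. The program copies data from one source to another, optionally converting it along the way.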

A simple command to run a real-world disk write test on Linux is:

dd bs=1M count=512 if=/dev/zero of=test conv=fdatasync

This creates a 512 MiB file named ‘test’ in the current directory, filled with zeroes, so run it from a directory on the disk you want to test. The flag conv=fdatasync tells dd to flush the file data to disk before it exits. Without this flag, dd completes the write but some of the data may still be sitting in the page cache, so the reported speed overstates the true write performance of the disk.
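To see the effect of the flag, the same write can be run both ways and the summary lines compared. This is a minimal sketch, assuming GNU dd; the write_test helper and the dd_scratch.tmp file name are made up for illustration, and the 64 MiB size is only there to keep the run short.

```shell
#!/bin/sh
# Sketch: run the same write with and without conv=fdatasync.
# write_test and dd_scratch.tmp are hypothetical names chosen for this example.
write_test() {
    # $1 = size in MiB; any remaining arguments are passed to dd as extra operands.
    count=$1; shift
    # LC_ALL=C keeps dd's summary in English; tail keeps only the summary line.
    LC_ALL=C dd bs=1M count="$count" if=/dev/zero of=dd_scratch.tmp "$@" 2>&1 | tail -n 1
    # Remove the scratch file so repeated runs start clean.
    rm -f dd_scratch.tmp
}

echo "page cache only:"
write_test 64
echo "with conv=fdatasync:"
write_test 64 conv=fdatasync
```

On a machine with plenty of free memory the first figure will usually be much higher than the second, because the first run can return before the data has reached the disk.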

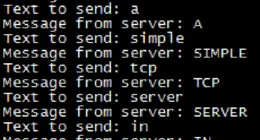

A sample of the run is below, with a simple SATA disk:

[14:11][root@server:~]$ dd bs=1M count=512 if=/dev/zero of=test conv=fdatasync

512+0 records in

512+0 records out

536870912 bytes (537 MB) copied, 5.19611 s, 103 MB/s
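The summary line can be checked by hand: 512 blocks of 1 MiB is 536870912 bytes, and dd reports the rate in decimal megabytes (1 MB = 1,000,000 bytes). A small sketch of that arithmetic, assuming awk is available; the throughput helper is a hypothetical name, not part of dd.

```shell
#!/bin/sh
# Sketch: recompute dd's reported rate from its byte count and elapsed time.
# throughput is a hypothetical helper; $1 = bytes copied, $2 = seconds elapsed.
throughput() {
    awk -v b="$1" -v s="$2" \
        'BEGIN { printf "%.1f MB/s, %.1f MiB/s\n", b / s / 1000000, b / s / 1048576 }'
}

# Figures taken from the sample run above.
throughput 536870912 5.19611   # prints: 103.3 MB/s, 98.5 MiB/s
```

The decimal figure matches the 103 MB/s that dd printed. The binary figure is lower because a MiB is about 4.9% larger than a MB.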

Now, there are some major caveats to using dd for disk benchmarking. The first is that it only tests filesystem access. Depending on your filesystem (I’m looking at you, ZFS), the write may simply be held in memory and written to disk later. The same goes for a RAID controller with its own write cache.

A much more accurate way to benchmark a disk is to use tools built specifically for the task. They write much more data over a longer period of time. Bonnie++ is a particularly useful tool for this purpose.
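A Bonnie++ run might look something like the sketch below. The post does not give an invocation, so everything here is an assumption: the ram_mib and run_bonnie helpers are hypothetical, the target directory is a placeholder, and sizing the test file at twice the installed RAM is a common rule of thumb for keeping the page cache from absorbing the whole write. Only the -d (directory), -s (file size in MiB) and -u (user) options are used.

```shell
#!/bin/sh
# Sketch of a Bonnie++ run sized at twice the installed RAM.
# ram_mib and run_bonnie are hypothetical helpers written for this example.

# Installed RAM in MiB, read from /proc/meminfo (Linux-specific; MemTotal is in kB).
ram_mib() {
    awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 }' /proc/meminfo
}

# $1 = a directory on the disk under test.
run_bonnie() {
    size_mib=$(( $(ram_mib) * 2 ))
    # -d: directory to test in, -s: test file size in MiB, -u: user to run as.
    bonnie++ -d "$1" -s "$size_mib" -u "$(id -un)"
}

# Nothing is benchmarked until run_bonnie is called, for example:
#   run_bonnie /mnt/disk-under-test
# That path is a placeholder; a real run takes a while and needs free space
# of about twice your RAM.
echo "Bonnie++ test file size would be $(( $(ram_mib) * 2 )) MiB"
```

Check the bonnie++ man page on your distribution before relying on these options, since defaults vary between versions.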

Now don’t forget to remove that test file.

3 comments

On a Linux machine with 4 GB of memory, if one created a 5 GB file, would it be written directly to the disk without being loaded into memory?

Maybe, but this is a bad way to go about it: if memory is ‘buffering’ the write, as it is designed to, you still do not get accurate disk speed times.

Nice. Thanks! <3 my server:

# dd bs=1M count=512 if=/dev/zero of=test conv=fdatasync

512+0 records in

512+0 records out

536870912 bytes (537 MB) copied, 0.590489 s, 909 MB/s
