Server management is a funny thing. No matter how long you have been doing it, new and interesting challenges continue to pop up and keep you on your toes. This is a story about one of those challenges.

I manage a server which has a sole purpose: serving DNS requests. We use PowerDNS, which has been great. It is a DNS server whose backend is SQL, making administration of large numbers of records very easy. It is also fast, easy to use, open source and did I mention it is free?

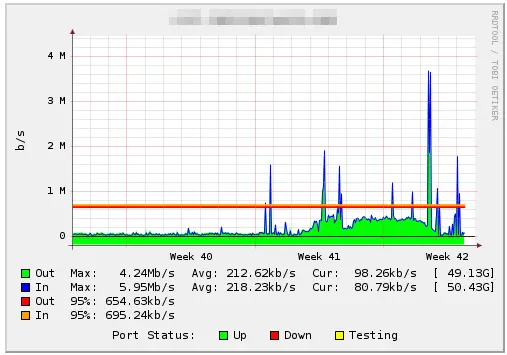

The server has been humming along for years now. The traffic graphs don’t show a lot of data moving through it because it only serves DNS requests (plus MySQL replication) in the form of tiny UDP packets.

We started seeing these spikes in traffic but everything on the server seemed to be working properly. Test connections with dig proved that the server was accurately responding to requests, but external tests showed the server going up and down.

The First Clue

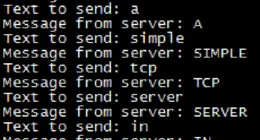

I started going through logs to see if we were being DoSed or if it was some sort of configuration problem. Everything seemed to be running properly and the requests, while voluminous, seemed to be legit. Within the flood of messages I spied error messages such as this:

printk: 2758 messages suppressed.

ip_conntrack: table full, dropping packet.

Ah ha! A clue! Let’s check the current numbers for ip_conntrack, the kernel’s netfilter connection-tracking module, which the firewall uses to keep tabs on the connections passing through the system.

[root@ns1 log]# head /proc/slabinfo

slabinfo - version: 2.0

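# name <active_objs> <num_objs> <objsize> <objperslab> <pagesperslab> : tunables <batchcount> <limit> <sharedfactor> : slabdata <active_slabs> <num_slabs> <sharedavail>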

ip_conntrack_expect 0 0 192 20 1 : tunables 120 60 8 : slabdata 0 0 0

ip_conntrack 34543 34576 384 10 1 : tunables 54 27 8 : slabdata 1612 1612 108

fib6_nodes 5 119 32 119 1 : tunables 120 60 8 : slabdata 1 1 0

ip6_dst_cache 4 15 256 15 1 : tunables 120 60 8 : slabdata 1 1 0

ndisc_cache 1 20 192 20 1 : tunables 120 60 8 : slabdata 1 1 0

rawv6_sock 4 11 704 11 2 : tunables 54 27 8 : slabdata 1 1 0

udpv6_sock 0 0 704 11 2 : tunables 54 27 8 : slabdata 0 0 0

tcpv6_sock 8 12 1216 3 1 : tunables 24 12 8 : slabdata 4 4 0
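Only the ip_conntrack row matters here. In the slabinfo 2.0 layout the first three numbers after the name are the active objects, the allocated objects and the object size in bytes. As a minimal sketch, the row can be pulled out with awk. The function name `conntrack_slab` is hypothetical, and the optional file argument exists only so the helper can be tried against a saved copy of the output.

```shell
#!/bin/sh
# Hypothetical helper: print "active total objsize" for the ip_conntrack slab.
# Reads /proc/slabinfo unless another file is passed as $1.
# The exact match on $1 skips the similarly named ip_conntrack_expect row.
conntrack_slab() {
    awk '$1 == "ip_conntrack" { print $2, $3, $4 }' "${1:-/proc/slabinfo}"
}

# Usage (as root, since /proc/slabinfo is usually not world-readable):
#   conntrack_slab
```

Run against the output above, this should print the three numbers from the ip_conntrack row: 34543 34576 384.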

Continuing this line of logic, let’s check our current value for this setting:

[root@ns1 log]# sysctl net.ipv4.netfilter.ip_conntrack_max

net.ipv4.netfilter.ip_conntrack_max = 34576

So it looks like we are hitting this limit. Once the number of tracked connections reaches ip_conntrack_max, the kernel simply drops any packet that would need a new entry. It does this so that connection tracking cannot overload and freeze the system when too many packets arrive at once.
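To put a number on how close the table is to the limit, the two values can be turned into a percentage. This is only a sketch using integer shell arithmetic; `conntrack_pct` is a made-up name, and the inputs are the active count from slabinfo and the ip_conntrack_max value shown above.

```shell
#!/bin/sh
# Hypothetical helper: how full is the conntrack table, in whole percent?
# $1 = entries currently in use, $2 = ip_conntrack_max
conntrack_pct() {
    echo $(( $1 * 100 / $2 ))
}

# Numbers taken from the slabinfo and sysctl output in this post
conntrack_pct 34543 34576    # prints 99
```

Anything that sits in the high 90s for long is a sign that packets are about to be dropped, or already are.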

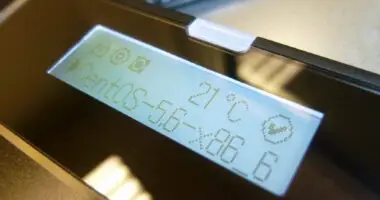

This system is running on CentOS 4.8; newer releases such as RHEL 5 set the default to 65536. For maximum efficiency we keep this number at a power of 2. The upper bound depends on your memory, so be careful: setting it too high may cause the system to run out of it.
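For a rough idea of the memory cost, multiply the planned maximum by the per-entry size, which is the objsize column (384 bytes) in the slabinfo output above. This is a back-of-the-envelope sketch only: it assumes every slot is in use and ignores the hash table and slab overhead, and `conntrack_mem_mib` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: approximate slab memory, in MiB, of a full conntrack table.
# $1 = ip_conntrack_max, $2 = bytes per entry (objsize from /proc/slabinfo)
conntrack_mem_mib() {
    echo $(( $1 * $2 / 1048576 ))
}

conntrack_mem_mib 65536 384     # prints 24
conntrack_mem_mib 131072 384    # prints 48
```

On this box that means a completely full table at 131072 entries would cost roughly 48 MiB of kernel memory for the entries themselves.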

Fixing The ip_conntrack Bottleneck

In my case I decided to go up 2 steps to 131072. To temporarily set it, use sysctl:

[root@ns1 log]# sysctl -w net.ipv4.netfilter.ip_conntrack_max=131072

net.ipv4.netfilter.ip_conntrack_max = 131072

Test everything out. If you run into problems with your network or the system crashes, a reboot will set the value back to its default. To make the setting persist across reboots, add the following line to your /etc/sysctl.conf file:

# need to increase this due to volume of connections to the server

net.ipv4.netfilter.ip_conntrack_max=131072
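If you script this change rather than editing the file by hand, it helps to make it safe to run more than once, so the key does not end up in the file twice. The following is a sketch under that assumption; `set_sysctl_conf` is a hypothetical helper, not part of any sysctl tooling.

```shell
#!/bin/sh
# Hypothetical helper: make sure a sysctl-style file has exactly one
# "key=value" line for the given key, dropping any older assignment.
# $1 = file, $2 = key, $3 = value
set_sysctl_conf() {
    file=$1 key=$2 value=$3
    tmp=$(mktemp) || return 1
    # Keep every line whose left-hand side (whitespace stripped) is not our key
    awk -v k="$key" -F= '{ n = $1; gsub(/[ \t]/, "", n); if (n != k) print }' "$file" > "$tmp"
    echo "${key}=${value}" >> "$tmp"
    # Write back through cat so the original file keeps its owner and mode
    cat "$tmp" > "$file" && rm -f "$tmp"
}

# Usage (as root):
#   set_sysctl_conf /etc/sysctl.conf net.ipv4.netfilter.ip_conntrack_max 131072
#   sysctl -p    # reload /etc/sysctl.conf without rebooting
```

Comments and unrelated settings are left alone; only an existing assignment of the same key is replaced.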

My theory is that since the server was dropping packets, remote hosts were re-sending their DNS requests, causing a ‘flood’ of traffic to the server and the spikes you see in the traffic graph above whenever traffic was mildly elevated. In other words, the bandwidth spikes were amplification caused by retried requests. After increasing ip_conntrack_max I immediately saw the bandwidth return to normal levels.

Your server should now be better prepared for an onslaught of tiny packets, legitimate or not. If you have even more connections than you can safely track with ip_conntrack, you may need to move to the next level: hardware firewalls and other methods of inspecting packets off-server on dedicated hardware.

Some resources used in my investigation of this problem:

[1] http://wiki.khnet.info/index.php/Conntrack_tuning

[2] http://serverfault.com/questions/111034/increasing-ip-conntrack-max-safely

[3] http://www.linuxquestions.org/questions/red-hat-31/ip_conntrack-table-full-dropping-packet-615436/

The image of the kittens used for the featured image has nothing to do with this post. There are no known good photos of a “UDP Packet”, and I thought that everyone likes kittens, so there it is. Credit flickr user mathias-erhart.
