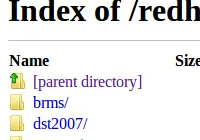

Evaluating FTP Servers: ProFTPd vs PureFTPd vs vsftpd

Usually I try to push clients towards SCP (via a client such as WinSCP). Inevitably, however, some clients do not understand this unfamiliar method of accessing their files securely online and, for one reason or another, insist on using FTP instead. As they say, the customer is always right?