The Latest

Firesheep Should Be A Call To Arms For System, Network & Web Admins

Firesheep by Eric Butler has just been released to the world. This Firefox plugin does a few things that have been fairly easy to do for a while, but it rolls them up into one easy-to-use package:

Sniffs data on unencrypted Wireless Networks

Looks for unencrypted login cookies sent to known popular insecure sites

Allows you to log in to that account with ‘One Click’

So which sites are impacted by default? Amazon.com, Basecamp, bit.ly, Cisco, CNET, Dropbox, Enom, Evernote, Facebook, Flickr, Github, Google, HackerNews, Harvest, Windows Live, NY Times, Pivotal Tracker, Slicehost, tumblr, Twitter, WordPress, Yahoo, and Yelp, among others. A plugin system allows anyone to add their own sites (and cookie styles) to the plugin.

Yikes! It goes without saying that this is a major security problem for anyone who uses unencrypted wireless networks. That includes the networks at many universities and at companies such as Starbucks.
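For admins wondering where to start, here is a minimal sketch of one check: given the value of a `Set-Cookie` header, list the cookies that are not marked `Secure` and so could be sent over plain HTTP. The function name `insecure_cookie_names` and the sample header values are my own illustrations, not anything taken from Firesheep itself.

```python
from http.cookies import SimpleCookie


def insecure_cookie_names(set_cookie_header):
    """Return the names of cookies in a Set-Cookie header value
    that lack the Secure attribute (hypothetical helper)."""
    jar = SimpleCookie()
    jar.load(set_cookie_header)
    # morsel["secure"] is an empty string unless the Secure flag was present
    return [name for name, morsel in jar.items() if not morsel["secure"]]


if __name__ == "__main__":
    # Illustrative header values, not captured from any real site
    print(insecure_cookie_names("session=abc123; Path=/; HttpOnly"))
    print(insecure_cookie_names("session=abc123; Path=/; Secure; HttpOnly"))
```

Keep in mind that the `Secure` attribute only helps when the site is actually served over HTTPS. It tells the browser not to send the cookie over an unencrypted connection, but it does not encrypt anything by itself.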

Fixing ip_conntrack Bottlenecks: The Tale Of The DNS Server With Many Tiny Connections

I manage a server which has a sole purpose: serving DNS requests. We use PowerDNS, which has been great. It is a DNS server whose backend is SQL, making administration of large numbers of records very easy. It is also fast, easy to use, open source and did I mention it is free?

The server has been humming along for years now. The traffic graphs don’t show a lot of data moving through it because it only serves DNS requests (plus MySQL replication) in the form of tiny UDP packets.

Read on to follow my story of how I fixed this tricky problem. No kittens were harmed in the writing of this post.

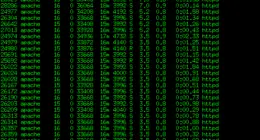

How to Stop an Apache DDoS Attack with mod_evasive

The first inkling that I had a problem with a DDoS (Distributed Denial of Service) attack was a note sent to my inbox:

lfd on server1.myhostname.com: High 5 minute load average alert – 89.14

My initial thought was that a site on my server was getting Slashdotted or encountering the Digg or Reddit effect. I run Chartbeat on several sites where this occasionally happens and I will usually get an alert from them first. A quick look at the Extended status page from Apache showed that I had a much different kind of problem.

The Ultimate Guide to DVD Encoding with Handbrake

It’s no secret that I’m a huge fan of Handbrake. After committing to copying my DVD collection to my storage array, I’ve tried and tested just about all the software out there for converting video to H.264, with an emphasis on quality and speed. Many software packages have problems with quality or desynchronized audio. Handbrake is my hands-down favorite when it comes to converting video, and that includes both free and commercial software.

One of the complaints I hear about Handbrake is that there are too many options. Well, the good news for someone looking for simplicity is that the built-in presets mostly take care of them for you. And for anyone who likes to dive into the nitty gritty of video compression, it also allows for a lot of tweaking to get the most out of your movie while maintaining small file sizes and high quality.

Read on for my full guide to Handbrake features.