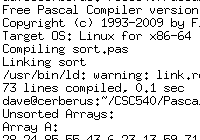

What a Resilver Looks Like in ZFS (and a Bug and/or Feature)

At home I have an (admittedly small) ZFS array set up to experiment with this neat, newish RAID technology. I think it has been around long enough to be used in production, but I’m still getting used to its little bugs/features, and here is one that I just found.

After discovering that two of my three 1TB Seagate Barracuda hard drives had failed, I had to write the array off as a loss and test my backup strategy. Fortunately it worked, and there was no data loss. Once the replacement drives came back from RMA, I rebuilt the ZFS array (using raidz again) and went along my merry way. After six months or so, I started getting some funky results from my other drive. Thinking it might have the same problem as the others, I removed the drive and ran SeaTools on it (by the way, SeaTools doesn’t offer a 64-bit Windows version: what year is this?).

The drive didn’t show any signs of failure, so I decided to wipe it and add it back into the array to see what would happen. That, of course, is easier said than done.